Building software can be challenging, but getting ahead of your error triaging workflow can be even harder. When it comes to uptime, reliability, and ultimately avoiding potential revenue loss, seconds matter when fixing issues that affect your customers.

To resolve issues faster, you need rich, contextual data in a unified experience so that you can prioritize and resolve the most mission-critical errors quickly. The latest updates to the New Relic errors inbox provide that context. They help you accelerate your error resolution workflows and reduce mean time to resolution (MTTR) by focusing on the issues that impact your customers.

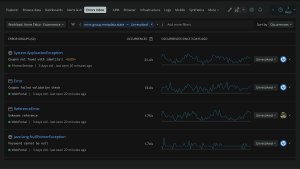

Prioritize errors with users impacted and alerts

Our latest errors inbox enhancement helps you gauge the relative importance of different error groups. You can now see the number of users impacted by each error group and create alerts based on this metric, so you can focus on the errors with the highest ROI.

You can generate New Relic alerts based on the number of users affected by errors. For a step-by-step walkthrough, see the tutorial in Get started setting alerts based on users impacted, later in this post.

View linked traces with error instances and get to the root cause faster

Modern applications have multiple components or services, which makes it challenging to identify the root cause of an issue. This is especially hard when you don’t fully understand how different services interact across a system. You might be left deciphering specific error messages to identify where things broke down, or switching between tools to understand why an issue degraded application performance.

Now you can access distributed tracing directly from your errors inbox, so you can derive insights and analyze errors within a single view.

Select an error to view its correlated traces, which give you an overview of the entire request and a summary of its duration, the number of spans, and the errors that occurred.

Select Explore to get more detailed context.

Then you can open up a more granular view of the request, with complete trace details and a visual representation of all the spans that were recorded. Use this visualization to quickly identify where things went wrong and focus on getting that issue resolved faster.

Collaborate in-context at the entity level with Slack

You might have read about our Slack integration. We’ve taken it a step further and now support Slack notifications all the way down to the entity level, allowing for enhanced collaboration across your team.

This enhancement to the integration provides notifications for your scoped inbox, which is specific to a service or application. You can now focus and collaborate on the applications owned by you and your team.

For more details on connecting errors inbox with Slack, watch this video:

Or follow these steps:

- If your Slack workspace does not have the New Relic app installed, do that first.

- Open one of your New Relic errors inboxes, and select the notification settings icon (the bell) in the top right corner.

- If the Slack button is off, select it to turn it on.

- If no workspaces are available, select the plus button to enable Slack.

- After you authenticate, you’ll be able to select a workspace and specific channel to send notifications to.

- Select Test to ensure messages are sent to the right channel.

Get started setting alerts based on users impacted

To get the most out of this new functionality, you’ll first need to make sure you are sending user impact data to New Relic. Then, you’ll configure the alert by determining:

- The error-producing entities to monitor and alert on

- The alert signal that is most valuable for your use case

- The threshold value that will be most immediately valuable to your organization

Here’s a quick tutorial with three basic steps:

1. Determine the entity.guid of an alerting service.

In general, you can create alerts based on any NRQL query. For this tutorial, you’ll create an alert on the error-producing entities that are impacting customers. If the entity you want to alert on is an APM service, select APM & services in the navigation, and then select that service. To find the service’s entity.guid, select See metadata and manage tags.

Then copy the entity.guid value.

Entities exist for all error-producing sources and workloads, but they are not limited to them.

For more information, check out our documentation on What is an entity and how to find one.

2. Create a query to get the number of users impacted.

To create an NRQL query that returns users impacted, first determine which services you want to include in your alert, and get their entity.guids.

After you’ve determined the entity.guids, go to the query builder, and insert this NRQL string:

SELECT uniqueCount(newrelic.error.group.userImpact) FROM Metric WHERE metricName = 'newrelic.error.group.userImpact' AND entity.guid IN ('entityGuid1', 'entityGuid2', …) FACET error.group.guid TIMESERIES

Replace entityGuid1, entityGuid2, and so on with the GUIDs of the services that you want to alert on.

This query returns the count of unique users impacted by each error group produced by the services for the entity.guids you provided. Next, you’ll need to define an alert that will trigger when the number of unique impacted users has exceeded a threshold.

Graphing this data in the query builder will give you an opportunity to adjust your query string if you’d like.
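If you adjust the query often, or manage many services, it can help to build the string programmatically so the GUID list is always quoted consistently. The following is a minimal sketch, assuming the metric name newrelic.error.group.userImpact used in the query above; the function name and the placeholder GUIDs are hypothetical, and you should check the metric name against your own account before relying on it.

```python
def users_impacted_query(entity_guids, per_error_group=True, timeseries=True):
    """Build the users-impacted NRQL string for a list of entity GUIDs.

    Assumes the metric name newrelic.error.group.userImpact (verify it in
    your account). Set per_error_group=False to drop the FACET clause and
    count users impacted across all error groups instead.
    """
    if not entity_guids:
        raise ValueError("at least one entity.guid is required")
    for guid in entity_guids:
        # GUIDs never contain quotes; reject anything that would break the literal.
        if "'" in guid:
            raise ValueError(f"unexpected quote in GUID: {guid!r}")
    # NRQL string literals are single-quoted.
    quoted = ", ".join(f"'{guid}'" for guid in entity_guids)
    parts = [
        "SELECT uniqueCount(newrelic.error.group.userImpact) FROM Metric",
        "WHERE metricName = 'newrelic.error.group.userImpact'",
        f"AND entity.guid IN ({quoted})",
    ]
    if per_error_group:
        parts.append("FACET error.group.guid")  # one signal per error group
    if timeseries:
        parts.append("TIMESERIES")  # useful for graphing in the query builder
    return " ".join(parts)


# Hypothetical placeholder GUIDs; substitute the ones you copied in step 1.
print(users_impacted_query(["GUID_A", "GUID_B"]))
```

You can paste the printed string into the query builder, graph it, and iterate from there.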

After you have the string that produces the signal you want to alert on, select Create alert. A window is displayed where you can configure your NRQL alert condition.

3. Create an NRQL alert based on the users impacted metric.

To create an alert based on the number of users impacted at your instrumented services, you’ll need to create an NRQL alert condition. Here’s how.

In the Edit an alert condition window, you define the thresholds that trigger the condition. Violations of the condition are highlighted, which helps you determine the best threshold for your particular use case. The ideal threshold varies by use case, but a good starting point is the smallest threshold value and violation duration that don’t trigger an alert.
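To make the “smallest value that doesn’t trigger” advice concrete, here is a small Python sketch that computes that threshold from a series of historical per-window counts. It rests on a simplifying assumption, not on New Relic’s exact evaluation logic: the condition is treated as firing when `duration` consecutive aggregation windows all have a value strictly above the threshold. The function name and sample counts are hypothetical.

```python
def smallest_quiet_threshold(counts, duration):
    """Smallest integer threshold that would NOT have fired on `counts`.

    Toy model (an assumption): the condition fires when `duration`
    consecutive windows are all strictly above the threshold.
    """
    if duration < 1:
        raise ValueError("duration must be at least 1 window")
    if len(counts) < duration:
        return 0  # not enough history for any run of `duration` windows
    # A run of windows fires only if its minimum exceeds the threshold,
    # so the quietest safe threshold is the largest of those run minimums.
    return max(
        min(counts[i:i + duration]) for i in range(len(counts) - duration + 1)
    )


# Hypothetical unique-users-per-window history with one isolated spike.
history = [0, 2, 9, 1, 3, 4, 3, 0]
print(smallest_quiet_threshold(history, 1))  # 9: the lone spike sets the bar
print(smallest_quiet_threshold(history, 2))  # 3: requiring 2 windows ignores it
```

The example shows why threshold value and violation duration should be tuned together: lengthening the duration lets you lower the threshold without the isolated spike causing an alert.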

Tuning the aggregation window might also help reduce noise and produce more actionable alerts:

If the window is too short, a small threshold might cause false alarms from a few transient errors, while a large threshold might miss a moderate but steady stream of impacted users. For more information, see our docs about window duration.
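The window-size trade-off can be illustrated with a toy model in Python. This sketch buckets hypothetical (minute, user) data points into aggregation windows, counts distinct users per window, and checks whether any window exceeds a threshold. It deliberately ignores violation duration and other condition settings, so treat it as an illustration of the reasoning rather than a model of how New Relic evaluates alerts.

```python
from collections import defaultdict


def unique_users_per_window(events, window_minutes):
    """Count distinct users in each aggregation window.

    `events` is a list of (minute, user_id) pairs: hypothetical stand-ins
    for user-impact data points.
    """
    buckets = defaultdict(set)
    for minute, user in events:
        buckets[minute // window_minutes].add(user)
    return {window: len(users) for window, users in sorted(buckets.items())}


def fires(events, window_minutes, threshold):
    """True if any window's distinct-user count exceeds the threshold."""
    counts = unique_users_per_window(events, window_minutes)
    return any(n > threshold for n in counts.values())


# Transient blip: three users hit an error in the same minute.
blip = [(0, "u1"), (0, "u2"), (0, "u3")]
# Steady trickle: one different user per minute for ten minutes.
trickle = [(m, f"u{m}") for m in range(10)]

print(fires(blip, 1, 2))      # True: short window + small threshold is noisy
print(fires(trickle, 1, 5))   # False: short window + large threshold misses it
print(fires(trickle, 10, 5))  # True: a longer window catches the trickle
print(fires(blip, 10, 5))     # False: and still ignores the blip
```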

Now you’re ready to save. Scroll down or collapse the alert configuration sections, and select Save condition.

Then the alert policy is created and enabled with default settings. Check out our policies documentation for more information.

Note: The NRQL string used here is faceted by error group, so an alert triggers if any single error group exceeds the threshold value for the violation duration. For your use case, it might be more appropriate to measure the total number of users impacted across all error groups rather than per error group. In that case, remove the FACET clause, as in this sample query:

SELECT uniqueCount(newrelic.error.group.userImpact) FROM Metric WHERE metricName = 'newrelic.error.group.userImpact' AND entity.guid IN ('entityGuid1', 'entityGuid2', …)

Also note that the entities you use in one alert condition don’t need to be of the same type.
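The difference between the faceted and unfaceted queries matters because a user can be hit by several error groups. The following Python sketch uses hypothetical (error group, user) pairs to mimic what a per-group unique count and a total unique count would return; the group names and users are made up for illustration.

```python
from collections import defaultdict


def users_impacted(events, per_error_group):
    """Distinct users impacted, either per error group or in total.

    `events` is a list of (error_group, user_id) pairs: hypothetical data.
    """
    if per_error_group:
        groups = defaultdict(set)
        for group, user in events:
            groups[group].add(user)
        return {group: len(users) for group, users in groups.items()}
    # Without a facet, each user is counted once no matter how many groups hit them.
    return len({user for _, user in events})


events = [
    ("checkout-timeout", "alice"), ("checkout-timeout", "bob"),
    ("payment-500", "bob"), ("payment-500", "carol"),
    ("search-404", "dave"),
]

print(users_impacted(events, per_error_group=True))
# {'checkout-timeout': 2, 'payment-500': 2, 'search-404': 1}
print(users_impacted(events, per_error_group=False))  # 4
```

With a threshold of 3, no single error group would trigger the faceted condition, but the total of 4 distinct users would trigger the unfaceted one. Note also that the total is not the sum of the per-group counts (5), because bob is counted only once.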

Continue your errors inbox journey and get ahead of your issues

Learn more about how the errors inbox in New Relic can help speed error prioritization and resolution by watching our demo video and reading through our documentation.

Errors inbox is available for core and full platform users. If you’re not using New Relic yet, sign up for a free account. It includes 100 GB/month of free data ingest, one free full-access user, and unlimited free basic users.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.